Introduction

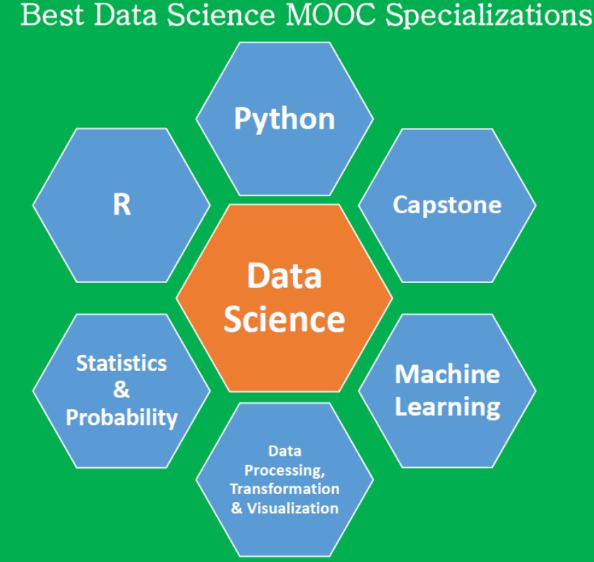

Topic modelling is a widely used technique in natural language processing (NLP) for uncovering hidden thematic structures in large collections of textual data. One of the most prominent algorithms for topic modelling is Latent Dirichlet Allocation (LDA). Introduced by David Blei, Andrew Ng, and Michael Jordan in 2003, LDA is a generative probabilistic model that represents documents as mixtures of topics, where each topic is characterised by a distribution over words. This article explores the concept of LDA, its working mechanism, its applications, and its implementation. Studying it as part of a Data Science Course can be particularly helpful for mastering the technique.

What is Topic Modelling?

Topic modelling is an unsupervised machine-learning technique that automatically identifies themes or topics in a collection of documents. Unlike supervised classification, it does not require labelled data. Instead, it relies on the statistical relationships between words to infer latent structures.

For instance, in a dataset of news articles, a topic modelling algorithm might identify themes like “politics,” “sports,” or “technology” based on the frequency and co-occurrence of words within the text. Understanding these structures is often a focus in a Data Science Course, as it helps in summarising large datasets, organising content, and discovering patterns that are not immediately apparent.

Understanding Latent Dirichlet Allocation (LDA)

LDA is based on the idea that each document is a mixture of topics, and each topic is a mixture of words. The main assumptions in LDA are:

- Documents as Topic Mixtures: Each document is represented as a probability distribution over a fixed number of topics. For example, a political news article might be 70% about “elections” and 30% about “economics.”

- Topics as Word Distributions: Each topic is represented by a probability distribution over words. For instance, a topic about “sports” might assign higher probabilities to words like “game,” “team,” and “score.”

- Bag-of-Words Representation: LDA operates under the bag-of-words assumption: it ignores word order and syntax and looks only at how often each word occurs in a document.
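To make the bag-of-words assumption concrete, here is a minimal sketch using only the Python standard library. The helper name `bag_of_words` and the two sentences are illustrative, not part of any LDA library.

```python
from collections import Counter

def bag_of_words(text):
    """Lowercase the text, split on whitespace, and count each token."""
    return Counter(text.lower().split())

a = bag_of_words("dog bites man")
b = bag_of_words("man bites dog")

print(a)
# The two sentences mean different things, but their word counts are
# identical, so a bag-of-words model cannot tell them apart.
print(a == b)
```

This is the information LDA sees for each document: which words occur and how often, and nothing about their order.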

The core mechanism of LDA revolves around Dirichlet distributions, which act as priors over the document-topic and topic-word distributions. When their concentration parameters are set below 1, as is common, these priors encourage sparsity, meaning that a document will predominantly belong to a few topics, and each topic will heavily favour a subset of words. In a career-oriented data course such as a Data Science Course in Mumbai tailored for professionals, the basic mechanism of LDA is covered in detail to give learners a solid foundation for working with large text corpora.
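The role of the Dirichlet priors can be illustrated with a short NumPy sketch of the generative story LDA assumes. The sizes, the seed, and the values of `alpha` and `beta` below are arbitrary choices for illustration, not recommended settings.

```python
import numpy as np

rng = np.random.default_rng(0)

n_topics, vocab_size, doc_length = 5, 8, 20   # illustrative sizes
alpha, beta = 0.2, 0.2                        # small values favour sparse draws

# One word distribution per topic, drawn from a symmetric Dirichlet prior.
topic_word = rng.dirichlet(np.full(vocab_size, beta), size=n_topics)

# One topic mixture for a single document.
doc_topic = rng.dirichlet(np.full(n_topics, alpha))

# Generate the document: pick a topic for each position,
# then pick a word id from that topic's word distribution.
topics = rng.choice(n_topics, size=doc_length, p=doc_topic)
words = np.array([rng.choice(vocab_size, p=topic_word[z]) for z in topics])

# Compare how concentrated topic mixtures are under a small and a large alpha
# by averaging the largest topic share over many draws.
sparse_peak = rng.dirichlet(np.full(n_topics, 0.2), size=2000).max(axis=1).mean()
dense_peak = rng.dirichlet(np.full(n_topics, 10.0), size=2000).max(axis=1).mean()
print(f"mean largest topic share: alpha=0.2 -> {sparse_peak:.2f}, alpha=10 -> {dense_peak:.2f}")
```

With a small concentration parameter, most of a document's probability mass tends to land on one or two topics. With a large one, the mixture is close to uniform. Training LDA amounts to running this story in reverse: given only the words, infer plausible topic mixtures and word distributions.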

How Does LDA Work?

The LDA algorithm involves the following steps:

1. Initialisation: The algorithm initialises the distribution of topics for each document and the distribution of words for each topic with random probabilities.

2. Topic Assignment: Each word in a document is assigned to a topic based on the current topic-word and document-topic distributions.

3. Parameter Estimation:

   - Topic-Word Distribution: Updates the probabilities of words belonging to each topic based on the current assignments.

   - Document-Topic Distribution: Updates the probabilities of topics for each document based on the current assignments.

4. Iterative Refinement: Steps 2 and 3 are repeated until the model converges, meaning that the topic assignments stabilise.
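The steps above do not name a specific inference method. One common way to realise them is collapsed Gibbs sampling, sketched below in NumPy. This is a toy version under stated assumptions: the function name `gibbs_lda`, the default hyperparameters, and the four tiny documents of word ids are all made up for illustration, and libraries such as Gensim use variational inference rather than this sampler.

```python
import numpy as np

def gibbs_lda(docs, n_topics, vocab_size, alpha=0.1, beta=0.01, n_iter=200, seed=0):
    """Toy collapsed Gibbs sampler. docs is a list of lists of word ids."""
    rng = np.random.default_rng(seed)
    doc_topic = np.zeros((len(docs), n_topics))     # topic counts per document
    topic_word = np.zeros((n_topics, vocab_size))   # word counts per topic
    topic_total = np.zeros(n_topics)                # total words per topic

    # Initialisation: give every word a random topic and record the counts.
    assignments = []
    for d, doc in enumerate(docs):
        z_doc = rng.integers(n_topics, size=len(doc))
        assignments.append(z_doc)
        for w, z in zip(doc, z_doc):
            doc_topic[d, z] += 1
            topic_word[z, w] += 1
            topic_total[z] += 1

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove the word's current assignment from the counts.
                z = assignments[d][i]
                doc_topic[d, z] -= 1
                topic_word[z, w] -= 1
                topic_total[z] -= 1
                # Topic assignment: resample in proportion to
                # (topic share in this document) * (word share in each topic).
                p = (doc_topic[d] + alpha) * (topic_word[:, w] + beta)
                p = p / (topic_total + vocab_size * beta)
                z = rng.choice(n_topics, p=p / p.sum())
                # Parameter update: add the new assignment back to the counts.
                assignments[d][i] = z
                doc_topic[d, z] += 1
                topic_word[z, w] += 1
                topic_total[z] += 1

    # Smoothed, normalised counts give the two output distributions.
    theta = (doc_topic + alpha) / (doc_topic + alpha).sum(axis=1, keepdims=True)
    phi = (topic_word + beta) / (topic_word + beta).sum(axis=1, keepdims=True)
    return theta, phi

# Word ids 0-2 and 3-5 never share a document, so two topics can separate them.
docs = [[0, 1, 2, 0, 1], [3, 4, 5, 3, 4], [0, 2, 1, 2], [5, 3, 4, 5]]
theta, phi = gibbs_lda(docs, n_topics=2, vocab_size=6)
print(theta.round(2))   # document-topic distributions, one row per document
print(phi.round(2))     # topic-word distributions, one row per topic
```

Here the count tables play the role of the distributions being refined: each pass reassigns every word and updates the counts, and a fixed number of passes stands in for a proper convergence check.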

The output of LDA includes:

- Topic-word distributions: Probabilities of words for each topic.

- Document-topic distributions: Probabilities of topics for each document.
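As a small illustration of how these two outputs are typically read, the sketch below uses hand-written matrices in place of a trained model. The vocabulary and all probabilities are hypothetical.

```python
import numpy as np

# Hypothetical vocabulary and model output, written by hand for illustration.
vocab = np.array(["game", "team", "score", "vote", "party", "election"])

# Topic-word distributions: 2 topics x 6 words, each row sums to 1.
topic_word = np.array([
    [0.40, 0.30, 0.20, 0.04, 0.03, 0.03],
    [0.02, 0.03, 0.05, 0.30, 0.25, 0.35],
])

# Document-topic distributions: 3 documents x 2 topics, each row sums to 1.
doc_topic = np.array([[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]])

# Top three words per topic, highest probability first.
top_words = [vocab[np.argsort(row)[::-1][:3]].tolist() for row in topic_word]

# Index of the most probable topic for each document (ties go to the lower index).
dominant_topic = doc_topic.argmax(axis=1)

print(top_words)
print(dominant_topic)
```

The topics themselves are unlabelled. Names such as “sports” or “politics” are assigned by a person after looking at the top words.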

Hands-on projects in a Data Science Course often include implementing these steps to gain practical experience with LDA.

Applications of LDA

LDA has found applications in various domains due to its ability to extract meaningful patterns from text. Some of the key applications include:

- Document Clustering: Grouping documents based on their thematic similarity.

- Recommendation Systems: Providing personalised recommendations by identifying user interests through topic distributions.

- Sentiment Analysis: Enhancing sentiment classification by incorporating topic-level features.

- Search and Information Retrieval: Improving search relevance by indexing documents based on latent topics.

- Content Summarisation: Extracting key themes to generate concise summaries of large text corpora.
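Several of these applications reduce to comparing documents by their topic distributions. A minimal sketch, assuming the document-topic matrix is already available and using the Hellinger distance as one reasonable choice of metric (the matrix values are invented):

```python
import numpy as np

def hellinger(p, q):
    """Hellinger distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    return np.sqrt(0.5 * np.sum((np.sqrt(p) - np.sqrt(q)) ** 2))

# Hypothetical document-topic distributions for three documents.
doc_topic = np.array([
    [0.8, 0.1, 0.1],
    [0.7, 0.2, 0.1],
    [0.1, 0.1, 0.8],
])

query = 0
distances = [hellinger(doc_topic[query], row) for row in doc_topic]
ranking = np.argsort(distances)   # nearest first; the query itself comes first

print(ranking)
```

The same ranking can drive a simple "more like this" recommendation or feed a clustering algorithm that groups documents by theme.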

These applications are commonly explored in a Data Science Course, providing insights into real-world use cases of LDA.

Advantages of LDA

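- Scalability: With online or distributed inference, LDA can be trained on large document collections.

- Interpretability: Each topic is a ranked list of words with probabilities, which a reader can usually inspect and label directly.

- Flexibility: LDA can be applied to many types of text data, although it works best on longer documents; very short texts give it little co-occurrence evidence to learn from.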

Challenges and Limitations

Despite its strengths, LDA has certain limitations:

- Bag-of-Words Assumption: Ignoring word order can lead to loss of contextual meaning.

- Number of Topics: LDA requires the number of topics to be specified beforehand, and the right value is rarely obvious in advance.

- Computational Complexity: The iterative nature of LDA can be computationally expensive for very large datasets.

- Sparse Data: Performance can degrade on highly sparse datasets, such as collections of very short documents in which few words co-occur.

Implementing LDA

LDA can be implemented using popular NLP libraries such as Gensim and Scikit-learn in Python. Below is a high-level implementation using Gensim:

```python
from gensim import corpora
from gensim.models import LdaModel
from gensim.parsing.preprocessing import STOPWORDS
from gensim.utils import simple_preprocess

# Sample dataset
documents = [
    "Artificial intelligence is the future of technology.",
    "The sports team won the championship.",
    "Economic policies affect global markets.",
]

# Preprocessing: tokenise, lowercase, strip punctuation, and remove stopwords
texts = [
    [word for word in simple_preprocess(doc) if word not in STOPWORDS]
    for doc in documents
]

# Create a dictionary (word <-> id mapping) and a bag-of-words corpus
dictionary = corpora.Dictionary(texts)
corpus = [dictionary.doc2bow(text) for text in texts]

# Train the LDA model
lda_model = LdaModel(corpus=corpus, id2word=dictionary, num_topics=2, random_state=42)

# Print the top words of each topic
for topic_id, topic in lda_model.print_topics():
    print(f"Topic {topic_id}: {topic}")
```

Optimising LDA

To enhance the performance of LDA:

- Preprocessing: Remove stopwords, lemmatise words, and ensure clean text.

- Hyperparameter Tuning: Experiment with the number of topics and with the Dirichlet priors alpha (document-topic) and beta (topic-word); Gensim calls the latter eta.

- Evaluation: Use metrics like coherence score and perplexity to evaluate topic quality.
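To give a feel for what a coherence score measures, the sketch below implements a simplified version of the UMass coherence measure in plain Python. The function name `umass_coherence` and the toy corpus are made up for illustration, and the code assumes every top word occurs in at least one document. In practice a library implementation, such as Gensim's CoherenceModel, is the safer choice.

```python
import math

def umass_coherence(top_words, tokenised_docs):
    """Simplified UMass coherence for one topic; higher means more coherent."""
    doc_sets = [set(doc) for doc in tokenised_docs]

    def doc_freq(*words):
        # Number of documents that contain all of the given words.
        return sum(all(w in doc for w in words) for doc in doc_sets)

    score = 0.0
    for m in range(1, len(top_words)):
        for l in range(m):
            # +1 smoothing avoids log(0) when two words never co-occur.
            joint = doc_freq(top_words[m], top_words[l]) + 1
            score += math.log(joint / doc_freq(top_words[l]))
    return score

# Toy corpus of already tokenised documents.
docs = [
    ["team", "game", "score"],
    ["team", "game"],
    ["vote", "party"],
    ["vote", "election", "party"],
]

coherent = umass_coherence(["team", "game"], docs)   # words that appear together
mixed = umass_coherence(["team", "vote"], docs)      # words that never co-occur

print(coherent > mixed)
```

A common way to choose the number of topics is to train models with several candidate values and compare their average coherence, alongside a manual read of the top words.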

Conclusion

Latent Dirichlet Allocation is a powerful tool for topic modelling that uncovers latent structures in textual data. Its ability to represent documents and topics as probability distributions makes it versatile for a wide range of NLP applications. While it has certain limitations, advancements in preprocessing techniques and the integration of deep learning models are addressing these challenges, making LDA an enduring technique in the ever-evolving field of NLP. A comprehensive data science course, such as a Data Science Course in Mumbai, equips learners with practical skills in LDA that are highly valuable in the modern data-driven world.

Business name: ExcelR- Data Science, Data Analytics, Business Analytics Course Training Mumbai

Address: 304, 3rd Floor, Pratibha Building. Three Petrol pump, Lal Bahadur Shastri Rd, opposite Manas Tower, Pakhdi, Thane West, Thane, Maharashtra 400602

Phone: 09108238354

Email: enquiry@excelr.com